Customize how the video renders

Introduction

When the ZEGOCLOUD SDK's built-in video rendering module cannot meet your app's requirement, the SDK allows you to customize the video rendering process. With custom video rendering enabled, the SDK will send out the video frame data captured locally or received from a remote stream so that you can use the data to perform the rendering by yourself.

Listed below are some scenarios where enabling custom video rendering is recommended:

- Your app uses a cross-platform framework that requires a complex UI hierarchy (e.g., Qt), or a game engine such as Unity, Unreal Engine, or Cocos.

- Your app requires special processing of the video frame data for rendering.

Prerequisites

Before you begin, make sure you complete the following:

- Create a project in ZEGOCLOUD Admin Console and get the AppID and AppSign of your project.

- Refer to the Quick Start doc to complete the SDK integration and basic function implementation.

Implementation process

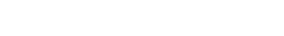

The process of custom video rendering is as follows:

- Create a "ZegoExpressEngine" instance.

- Enable the feature of custom video rendering. Set up the event handler for custom video rendering callbacks, and implement the callback handler methods according to your app's requirements.

- Log in to a room and start previewing the locally captured video or playing a remote stream. The SDK will then start sending out video frame data of the local or remote stream through the corresponding callback for custom video rendering.

Refer to the API call sequence diagram below to implement custom video rendering in your project:


Enable the Feature of Custom Video Rendering

First, create a ZegoCustomVideoRenderConfig object and configure its attributes according to your actual rendering requirements.

Set the "bufferType" (ZegoVideoBufferType) attribute to specify the video frame data type for custom rendering.

Set the "frameFormatSeries" (ZegoVideoFrameFormatSeries) attribute to specify the color space (RGB or YUV) of the video frame data for custom rendering.

Set the "enableEngineRender" attribute to determine whether the SDK's built-in renderer is also enabled. If it is set to "true", the SDK's built-in renderer will render the stream to the View passed to startPreview or startPlayingStream. If it is set to "false", the SDK's built-in renderer will not render the stream.

Then, call enableCustomVideoRender to enable custom video rendering.

    ZegoCustomVideoRenderConfig videoRenderConfig = new ZegoCustomVideoRenderConfig();
    // Send out video frame data in RAW_DATA format.
    videoRenderConfig.bufferType = ZegoVideoBufferType.RAW_DATA;
    // Specify the color space of video frame data as RGB.
    videoRenderConfig.frameFormatSeries = ZegoVideoFrameFormatSeries.RGB;
    // Enable the SDK's built-in renderer.
    videoRenderConfig.enableEngineRender = true;
    engine.enableCustomVideoRender(true, videoRenderConfig);

Set up the Custom Video Rendering Callback Handler

Call setCustomVideoRenderHandler to set up an event handler (IZegoCustomVideoRenderHandler) to listen for and handle the callbacks related to custom video rendering.

Implement the handler method for the callback onCapturedVideoFrameRawData. The SDK sends out the video frame data captured locally through this callback for the custom rendering of the local preview.

Implement the handler method for the callback onRemoteVideoFrameRawData. The SDK sends out the video frame data received from a remote stream through this callback for the custom rendering of the remote stream playback.

    engine.setCustomVideoRenderHandler(new IZegoCustomVideoRenderHandler() {
        @Override
        public void onCapturedVideoFrameRawData(ByteBuffer[] data, int[] dataLength, ZegoVideoFrameParam param, ZegoVideoFlipMode flipMode, ZegoPublishChannel channel) {
            // Use the data received from this callback to perform the custom video rendering for local preview.
            ...;
        }

        @Override
        public void onRemoteVideoFrameRawData(ByteBuffer[] data, int[] dataLength, ZegoVideoFrameParam param, String streamID) {
            // Use the data received from this callback to perform the custom video rendering for remote stream playback.
            ...;
        }
    });

The "flipMode" (ZegoVideoFlipMode) parameter of the callback "onCapturedVideoFrameRawData" indicates whether you need to flip the video yourself so that the preview matches the mirroring effect configured through the "mirrorMode" (ZegoVideoMirrorMode) parameter of setVideoMirrorMode.

The "param" (ZegoVideoFrameParam) parameter of both callbacks above describes the attributes of the video frames, which are defined as below:

/**

* Video Frame Parameters

*

*/

public class ZegoVideoFrameParam {

/** The pixel format (e.g., I420, NV12, NV21, RGBA32, etc.) */

public ZegoVideoFrameFormat format;

/** The stride values of each color plane (e.g., for RGBA format, only the value of strides[0] needs to be used; while for I420, the values of strides[0,1,2] need to be used). */

final public int[] strides = new int[4];

/** The width of the video image */

public int width;

/** The height of the video image */

public int height;

/** The rotation angle of the video image */

public int rotation;

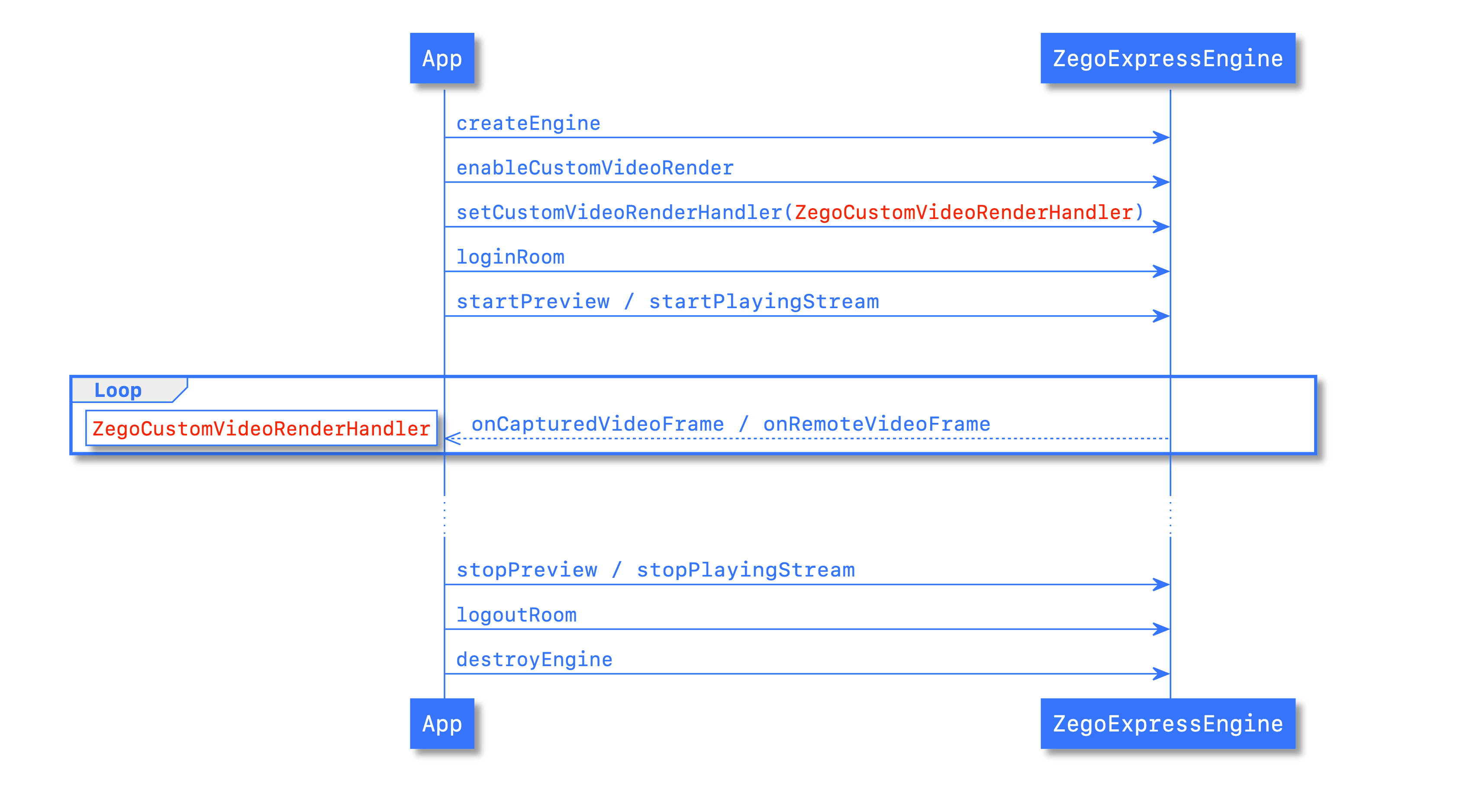

}The relationship between the stride and the image width is illustrated in the picture below:

)
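Because a row's stride can be larger than the visible row, a renderer that expects tightly packed pixels has to skip the padding at the end of each row. The sketch below shows one way to do that for a single plane. It assumes the stride is expressed in bytes and that the plane data starts at index 0 of the buffer; `StridePacker` and `packPlane` are hypothetical names, not part of the SDK.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Hypothetical helper: copies one strided plane into a tightly packed array. */
final class StridePacker {

    /**
     * @param plane         source plane; consecutive rows start strideBytes apart
     * @param strideBytes   bytes from the start of one row to the next (assumed to be in bytes)
     * @param width         visible width in pixels
     * @param height        number of rows
     * @param bytesPerPixel bytes per pixel in this plane (4 for RGBA32, 1 for a Y plane)
     */
    static byte[] packPlane(ByteBuffer plane, int strideBytes, int width, int height, int bytesPerPixel) {
        int rowBytes = width * bytesPerPixel;
        if (strideBytes < rowBytes) {
            throw new IllegalArgumentException("stride is smaller than one row of pixels");
        }
        byte[] packed = new byte[rowBytes * height];
        // Work on a duplicate so the caller's position and limit are left untouched.
        ByteBuffer src = plane.duplicate();
        src.clear(); // position = 0, limit = capacity (assumes data starts at index 0)
        for (int row = 0; row < height; row++) {
            src.position(row * strideBytes);            // jump to the start of this row
            src.get(packed, row * rowBytes, rowBytes);  // copy only the visible bytes
        }
        return packed;
    }

    public static void main(String[] args) {
        // A 2x2 single-byte plane with a stride of 4: two padding bytes (9) per row.
        ByteBuffer plane = ByteBuffer.wrap(new byte[] {1, 2, 9, 9, 3, 4, 9, 9});
        System.out.println(Arrays.toString(packPlane(plane, 4, 2, 2, 1))); // prints [1, 2, 3, 4]
    }
}
```

In a callback, this would typically be applied to `data[0]` with `param.strides[0]` for an RGBA frame, and to each plane separately for planar YUV formats.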

Trigger the Custom Video Rendering Callback

After logging in to a room, you can start the preview of the local stream or the playback of a remote stream to trigger the custom video rendering callback.

Custom Video Rendering for Local Preview

With custom video rendering enabled, calling startPreview will trigger the callback onCapturedVideoFrameRawData through which the SDK sends out the video frame data captured locally. You can then use the video data obtained from this callback to render the local preview by yourself.

If the SDK's built-in renderer is enabled, you need to pass in a View as the "canvas" parameter when calling startPreview; otherwise, set the "canvas" parameter to "null".

After the preview is started, you can then start publishing the stream.

    // If the SDK's built-in renderer is enabled, call [startPreview] with a View passed in as the `canvas` parameter.
    // textureViewLocalPreview is a TextureView UI object.
    ZegoCanvas previewCanvas = new ZegoCanvas(textureViewLocalPreview);
    ZegoExpressEngine.getEngine().startPreview(previewCanvas);

    // If the SDK's built-in renderer is NOT enabled, call [startPreview] with the `canvas` parameter set to null instead.
    ZegoExpressEngine.getEngine().startPreview(null);

    // After the preview is started, the SDK will start sending out video frame data for custom rendering through the callback [onCapturedVideoFrameRawData].

    // Start publishing the stream.
    ZegoExpressEngine.getEngine().startPublishingStream(streamID);

Custom Video Rendering for Remote Stream Playback

With custom video rendering enabled, calling startPlayingStream will trigger the callback onRemoteVideoFrameRawData through which the SDK sends out the video frame data received from a remote stream. You can then use the video data obtained from this callback to render the remote stream by yourself.

If the SDK's built-in renderer is enabled, you need to pass in a View as the "canvas" parameter when calling startPlayingStream; otherwise, set the "canvas" parameter to "null".

    // If the SDK's built-in renderer is enabled, call [startPlayingStream] with a View passed in as the `canvas` parameter.
    // textureViewRemoteVideoView is a TextureView UI object.
    ZegoCanvas playCanvas = new ZegoCanvas(textureViewRemoteVideoView);
    ZegoExpressEngine.getEngine().startPlayingStream(streamID, playCanvas);

    // If the SDK's built-in renderer is NOT enabled, call [startPlayingStream] with the `canvas` parameter set to null instead.
    ZegoExpressEngine.getEngine().startPlayingStream(streamID, null);

    // After the stream playback is started, the SDK will start sending out video frame data for custom rendering through the callback [onRemoteVideoFrameRawData].
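Rendering usually happens on a different thread from the one that delivers the frame callbacks, so the frame data has to be handed over somehow. The following is a minimal sketch of one common pattern: copy the bytes inside the callback and keep only the newest frame for the renderer. It rests on two assumptions that this document does not confirm: that the buffer passed to the callback may be reused after the callback returns, and that dropping stale frames is acceptable for your app. `LatestFrameSlot` is a hypothetical class, not an SDK type.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical single-slot mailbox: the callback thread publishes, the render thread takes. */
final class LatestFrameSlot {
    private final AtomicReference<byte[]> latest = new AtomicReference<>();

    /**
     * Call from the frame callback. Copies `length` bytes starting at the buffer's
     * current position, so the source buffer is not needed after this returns.
     */
    void publish(ByteBuffer data, int length) {
        byte[] copy = new byte[length];
        ByteBuffer src = data.duplicate(); // leave the caller's position untouched
        src.get(copy, 0, length);
        latest.set(copy);                  // overwrites any frame not yet rendered
    }

    /** Call from the render thread. Returns the newest unrendered frame, or null if there is none. */
    byte[] take() {
        return latest.getAndSet(null);
    }

    public static void main(String[] args) {
        LatestFrameSlot slot = new LatestFrameSlot();
        slot.publish(ByteBuffer.wrap(new byte[] {1, 1}), 2);
        slot.publish(ByteBuffer.wrap(new byte[] {2, 2}), 2); // replaces the first frame
        System.out.println(slot.take()[0]);      // prints 2
        System.out.println(slot.take() == null); // prints true
    }
}
```

A queue would be the alternative if every frame must be rendered; the single slot is chosen here because it bounds memory and latency when the renderer falls behind.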

FAQ

What's the difference between custom video rendering for local preview and custom video rendering for remote stream playback?

Custom video rendering for local preview is performed on the stream publishing end to give the streamer a preview of the local stream with special rendering effects. Custom video rendering for remote stream playback is performed on the stream receiving end to provide the viewers with special rendering effects.

If the "enableEngineRender" attribute of ZegoCustomVideoRenderConfig is set to "false", what value should be passed to the "canvas" parameter when calling startPreview and "startPlayingStream"?

If "enableEngineRender" is set to "false", the engine's built-in renderer will not do any video rendering, so the "canvas" parameter can be set to "null".

When custom video rendering for local preview is enabled, will the processed video data for preview also get published out?

No. The custom rendering process only affects the local preview and does not affect the video data that are streamed out.

What is the width and height of the video frames for local preview custom rendering?

The width and height of the video frames for local preview custom rendering can be obtained from the "param" (ZegoVideoFrameParam) parameter of the custom video rendering callback. They should match the video capture resolution set through setVideoConfig or, if none was set, the engine's default video capture resolution.

What is the pixel format of the video frame data for custom video rendering? Is the YUV format supported?

Both YUV and RGB are supported. As described in the section "Enable the Feature of Custom Video Rendering" above, you need to set the "frameFormatSeries" (ZegoVideoFrameFormatSeries) attribute to specify the color space (RGB or YUV) of the video frame data when calling enableCustomVideoRender to enable custom video rendering. The specific pixel format of the video frame data can be obtained from the "format" (ZegoVideoFrameFormat) attribute of the "param" (ZegoVideoFrameParam) parameter of the custom video rendering callbacks.
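Once the pixel format is known, a renderer needs to know how many planes to read and how large each one is. The sketch below computes the expected plane sizes for a frame without row padding. The `Format` enum is a local stand-in that only borrows the format names listed in this document; it is not the SDK's ZegoVideoFrameFormat, and the code assumes even width and height.

```java
import java.util.Arrays;

/** Hypothetical helper: expected plane sizes, in bytes, for an unpadded frame. */
final class PlaneLayout {

    /** Local stand-in for the SDK's pixel format enum (names only). */
    enum Format { I420, NV12, NV21, I422, BGRA32, RGBA32, ARGB32, ABGR32 }

    /** Returns one entry per plane. Assumes stride == row width and even dimensions. */
    static int[] planeSizes(Format format, int width, int height) {
        int luma = width * height; // one byte per pixel in the Y plane
        switch (format) {
            case I420: // Y, then U and V each subsampled 2x2
                return new int[] {luma, luma / 4, luma / 4};
            case NV12: // Y, then one interleaved UV plane
            case NV21: // Y, then one interleaved VU plane
                return new int[] {luma, luma / 2};
            case I422: // Y, then U and V each subsampled horizontally only
                return new int[] {luma, luma / 2, luma / 2};
            default:   // 32-bit RGB formats: a single plane, 4 bytes per pixel
                return new int[] {luma * 4};
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(planeSizes(Format.I420, 640, 480)));
        // prints [307200, 76800, 76800]
    }
}
```

When the stride reported in "param" is larger than the row width, the actual buffer sizes are larger than these values, so treat them as a lower bound.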

The supported pixel formats defined in ZegoVideoFrameFormat are listed below for your reference.

| Pixel Format | Description |
| --- | --- |
| I420 | YUV420P; 12 bits per pixel. It has three planes: the luma plane Y first, then the U chroma plane, and last the V chroma plane. For a 2×2 square of pixels, there are 4 Y samples but only 1 U sample and 1 V sample. |
| NV12 | YUV420SP; 12 bits per pixel. It has two planes: one luma plane Y and one plane with U and V values interleaved. For a 2×2 square of pixels, there are 4 Y samples but only 1 U sample and 1 V sample. |
| NV21 | Like NV12, but with the U and V order reversed: it starts with V. |
| BGRA32 | BGRA is an sRGB format with 32 bits per pixel. Each channel (Blue, Green, Red, and Alpha) is allocated 8 bits per pixel. |
| RGBA32 | Similar to BGRA; just the channels are in a different sequence (Red, Green, Blue, Alpha). |
| ARGB32 | Similar to BGRA; just the channels are in a different sequence (Alpha, Red, Green, Blue). |
| ABGR32 | Similar to BGRA; just the channels are in a different sequence (Alpha, Blue, Green, Red). |
| I422 | YUV422P; 16 bits per pixel. It has three planes: one luma plane Y and two chroma planes U and V. For a 2×2 group of pixels, there are 4 Y samples, 2 U samples, and 2 V samples. |

What is the frequency of the callbacks for custom video rendering? Is there anything I should pay attention to?

In general, the frequency of the callback for local preview custom rendering is the same as the frame rate set for stream publishing. But it also changes according to the frame rate set for traffic control (if such settings are enabled). The frequency of the callback for remote stream playback custom rendering changes according to the actual frame rate of the video data being received. For example, it may change due to any frame rate change on the stream publishing end for traffic control or any network stuttering on the stream receiving end.
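Since the callback frequency can vary at runtime, a renderer may want to measure the rate it actually receives rather than assume the configured one. The sketch below is one way to do that, counting frame intervals over a fixed time window. `FrameRateMeter` is a hypothetical helper; the caller is assumed to pass a monotonic timestamp such as `System.nanoTime()` from inside the frame callback.

```java
/** Hypothetical helper: measures the observed callback rate over a sliding time window. */
final class FrameRateMeter {
    private final long windowNanos;
    private boolean started;
    private long windowStart;
    private int intervals;   // frame-to-frame intervals seen in the current window
    private double lastFps;  // result of the most recently completed window

    FrameRateMeter(long windowMillis) {
        this.windowNanos = windowMillis * 1_000_000L;
    }

    /** Call once per frame. Returns the rate of the last completed window (0.0 until one completes). */
    double onFrame(long nowNanos) {
        if (!started) {           // the first frame only opens the window
            started = true;
            windowStart = nowNanos;
            return lastFps;
        }
        intervals++;
        long elapsed = nowNanos - windowStart;
        if (elapsed >= windowNanos) {
            lastFps = intervals * 1e9 / elapsed;
            windowStart = nowNanos; // the next window starts at this frame
            intervals = 0;
        }
        return lastFps;
    }

    public static void main(String[] args) {
        FrameRateMeter meter = new FrameRateMeter(1000);
        double fps = 0;
        // Simulate frames arriving every 100 ms for one second.
        for (int i = 0; i <= 10; i++) {
            fps = meter.onFrame(i * 100_000_000L);
        }
        System.out.println(fps); // prints 10.0
    }
}
```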

How to get the data of the first frame for custom video rendering?

The data received from the first custom video rendering callback is the data for the first frame.

Is it possible to enable custom video rendering for local preview only and still let the ZEGO SDK handle the rendering for remote stream playback?

Yes. If the "enableEngineRender" attribute of ZegoCustomVideoRenderConfig is set to "true", the SDK's built-in renderer will also render the video data to the view specified in the "canvas" parameter of startPreview and startPlayingStream while sending out the video data for custom rendering.

Custom video rendering is enabled, but no video data callback is received for the local preview rendering. How to solve the problem?

Before you start publishing the stream, you need to call startPreview to trigger the custom rendering callback.

For local preview custom rendering, why is horizontal mirroring not done by default for the video frame data captured from the front camera?

For custom video rendering, you need to implement video mirroring by yourself. You can use the "flipMode" (ZegoVideoFlipMode) parameter of the custom rendering callback to determine whether mirroring is required.
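As an illustration of doing the mirroring yourself, the sketch below flips a frame left to right in place. It assumes the frame has already been packed to 4 bytes per pixel with no row padding (for example RGBA32 with stride equal to width × 4). `FrameMirror` is a hypothetical helper, and deciding when to call it based on the "flipMode" value is left to the app.

```java
import java.util.Arrays;

/** Hypothetical helper: horizontal mirroring of a packed 32-bit frame. */
final class FrameMirror {
    private static final int BYTES_PER_PIXEL = 4;

    /** Swaps pixels symmetrically around the vertical center line of each row, in place. */
    static void mirrorHorizontally(byte[] pixels, int width, int height) {
        for (int row = 0; row < height; row++) {
            int left = row * width * BYTES_PER_PIXEL;            // first pixel of the row
            int right = left + (width - 1) * BYTES_PER_PIXEL;    // last pixel of the row
            while (left < right) {
                for (int b = 0; b < BYTES_PER_PIXEL; b++) {      // swap one whole pixel
                    byte tmp = pixels[left + b];
                    pixels[left + b] = pixels[right + b];
                    pixels[right + b] = tmp;
                }
                left += BYTES_PER_PIXEL;
                right -= BYTES_PER_PIXEL;
            }
        }
    }

    public static void main(String[] args) {
        byte[] frame = {1, 2, 3, 4, 5, 6, 7, 8}; // one row, two pixels
        mirrorHorizontally(frame, 2, 1);
        System.out.println(Arrays.toString(frame)); // prints [5, 6, 7, 8, 1, 2, 3, 4]
    }
}
```

On a GPU-based renderer the same effect is usually cheaper to achieve by flipping texture coordinates instead of touching the pixel data.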